Over the past two years, the link between virtual worlds and live events has grown increasingly close. COVID-19 accelerated a shift that was already under way, but some questions remain open. Among them is how to recreate, virtually, the kind of physical involvement that certain live events are able to convey. We reached out to one of the leading companies in this field, Evoke Studios, currently involved in the international tour of (the wonderful, my poor heart) Ed Sheeran. Let’s discover this studio together and find out more about how it operates through the words of Vincent Steenhoeck, founder and managing director, and Urs Nyffenegger, co-founder and head of content.

The International approach behind Evoke Studios

URS NYFFENEGGER – Evoke is a young company founded in 2018. We started out in live events, but when the pandemic brought them to a complete stop in 2020, we moved into virtual worlds and technologies, streaming, virtual studios… and this has been our core business for the last two years.

VINCENT STEENHOECK – Even if we’re a relatively young studio, everybody working here has been in the live-events field for quite some time. We currently have a team of between 11 and 20 people, depending on the project, and we’re also a fully remote company, which really differentiates us from other studios.

The main partners – me, Urs, Chema (Menendez, creative workflow manager) and Kristaps (Liseks, technical director) – come from four different countries, and the team includes people from Great Britain, Portugal, Germany and so on. This international presence allowed us to cover quite a wide range of events and projects when we started. When the pandemic hit, we were already in the right place at the right time to start producing extended reality experiences – which for us takes on a slightly different meaning than it usually does in video games or VR experiences. In general, we work a lot with augmented reality and green screens, but everything rests on the same fundamentals: camera tracking, real-time rendering engines and programming.

We really care about creating shared experiences and in fact we’re also members of AIXR (a/n The Academy of International Extended Reality, an association providing membership services to organizations leveraging VR, AR, and the Metaverse).

Overcoming the boundaries of immersive technologies

V. S. – What we do is create technical solutions and put them in different contexts to achieve different results. We worked on Ed Sheeran’s recent tour and it was great to use some of the technologies surrounding XR, like photogrammetry and motion capture, to create that kind of content. So, the question is not just “how do we use these technologies”. It is also “how can I use them in an engaging way?”.

That’s why we’re looking at the metaverse as the next step – a lot of the work we do fits within this kind of space, even though much of the conversation around it today revolves around the use of VR. We’re also interested in wearables and AR glasses to create different kinds of immersive experiences with less focus on broadcast-scale cameras and more on the personal perspective of an event.

We want to see what kind of innovation is possible, but also what experiential limits we run into, so that we can try to overcome them. Again, the basic principles are still the same; what matters is understanding how to approach them to create interesting results.

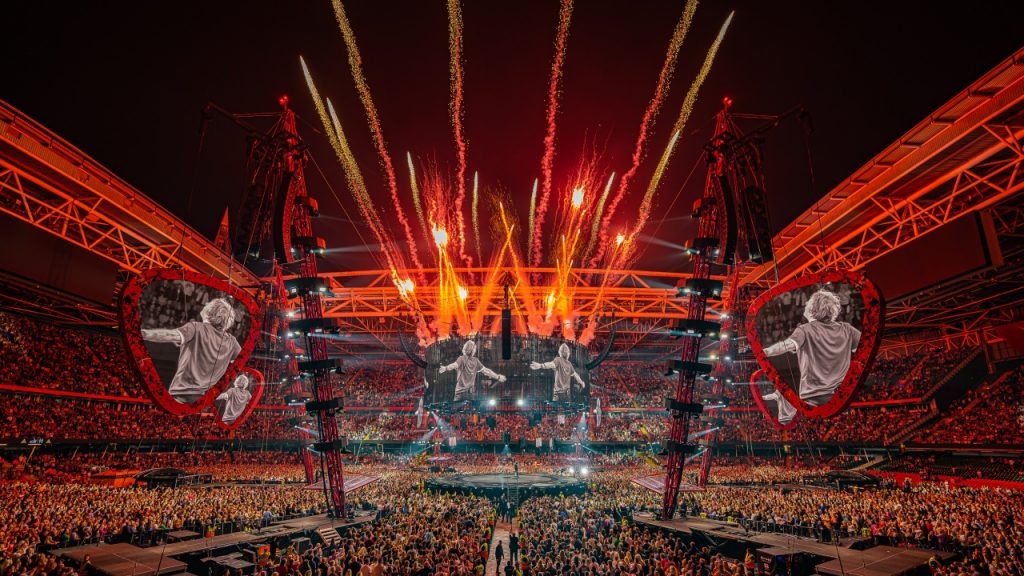

U. N. – For Ed Sheeran’s tour we are doing part of the visuals for the stage. The process behind this kind of work is always the same, and it’s a creative one. To simplify: the songs inspire the moodboards and the style, you build a virtual preview of the experience, and then you get on site and check that everything created beforehand actually works.

This is the basic approach. But as far as the XR part is concerned, what’s exciting is that we are using more and more real-time tools, such as Unreal Engine or Notch, both for the style and for the content we develop, and applying them in ways they weren’t originally conceived for, for example to get the best out of round stages, which can be difficult since round stages have their own rules and editing requirements.

How to present the possibilities offered by today’s technologies to their possible users

V. S. – We are a relatively small group of people, but our projects are very broad and this is because we are a very responsive studio. We work with several tools and we like to use the right one for the job.

We also focus on projects of a certain scale. Our background in large-scale live events, such as projection mapping and tracking installations, gives us a good frame of reference for understanding the risks before going into specific digital projects. We plan accordingly and do a lot of testing, and it is really exciting to see what we can achieve.

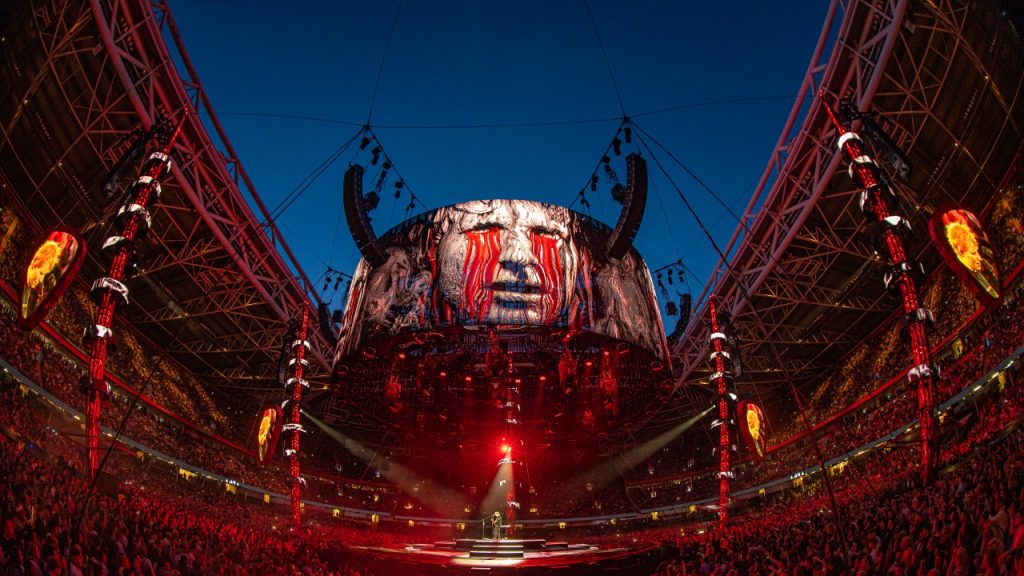

U. N. – For instance, we had the opportunity to do a complete photogrammetry scan of our client, Ed Sheeran himself. We wanted to push this tool among the ones we offer, and he jumped at the idea because of the incredible things that could be done with it: there is a whole song embodied by his face, and it was a great opportunity to be able to do it.

V. S. – We take an approach that allows us to reuse data in many different ways and for different concepts within the same project. We can enable performance and face tracking and new integrations and create more engaging experiences with all this data at our disposal. But for our customers it also means that they don’t spend money on something they can only use once. For them it is rather a solid investment. We can work to push the boundaries and do new interesting things, because our customers understand the possibilities this offers them.

It’s still true that many solutions are shrouded in mystery, which is fine; there is value in that too. But we want people to understand what they are buying. It is important to us that everyone can participate in the conversation in an informed way. The depth of your technical knowledge should not determine whether you can talk about XR in depth! Also, XR, metaverse, immersive technologies… They all raise a lot of questions and only a few people really understand what they mean. We certainly don’t have all the answers, but we do have some, so we try to involve our customers, and if they need us to hold their hand, that’s perfectly fine! The important thing is that they know what they can expect from us and that we provide what they really need.

A deeper insight into Ed Sheeran’s international tour

V. S. – As is often the case with jobs as complex as this, we had to overcome a number of obstacles.

One of the main challenges our visual content teams faced during the pre-production process was caused by the sheer size of the stage itself. There isn’t a rehearsal studio in existence that would have allowed us to build the full stadium stage and gauge how the screens would look from the furthest seats in the venue.

This created a lot of problems, including deciding which frame rates to use and how to scale the text and content itself. We wanted the video to look impressive whether the audience was standing 10m away or 100m away, and this was something that could only be fine-tuned once we were on the road.

We were only able to overcome these potential obstacles thanks to a strong work ethic and a collaborative spirit, both internally and with our amazing external partner, Twotrucks Productions.

As for the songs, our brief was to design treatments for 10 songs. These were to be largely 3D-based, as that’s a style of work that Mark Cunniffe (a/n production designer for Ed Sheeran’s tour) prefers to see and is our strength as a company, alongside designing matching Notch iMAG treatments. We were also tasked with helping to design 5-6 of the big moments in the show.

Mark has been very generous in allocating some of Ed’s biggest hits to us, so even though we handle only about a third of Ed’s total time on stage, we’re given a lot of room to create impact and audience engagement.

We created treatments for:

Bad Habits – we have exploding balloons, bright colours, undead bodies flying everywhere, it’s a Halloween inspired party to get the audience dancing.

Sing – A strong 2D, black-and-white motion graphics look, with animated words dancing across the screens, pushing the LED screens for all the light they can emit, and edge-detection looks on Ed on the iMAG screens.

Galway Girl – Think Irish gift shop. A nice playful look, with a lot of motion and colour in the motion graphics, and a desaturated look for the iMAG on Ed.

Overpass Graffiti – Dancing graffiti words and arrows responsive to the lyrics, paint splashes and deep colours all around.

A Team – A very calm look presenting an all-around flowing gauze, with a lot of shadow play of shapes of branches and feminine outlines moving across the screen.

Shape of You – Bright colours, gradients of blues and purples, and flowing ribbons. The look creates a lot of depth by using sliced animations of 3D models of attractive faces, and we see Ed’s image on the flowing ribbon animations, moving fluidly in and out of view.

I See Fire – A beautiful animation of snow-covered mountain tops and fiery orbs in which we see Ed playing, with rolling scene transitions in between, culminating in a fiery explosion of magma.

Thinking Out Loud – A particle-based movement piece, inspired by the original music video for the song. We went through a motion capture process to capture the essence of the video, which lies in the movement of the ballerina and Ed. We created a kind of in-the-round, shiny-floor ballroom look, in which the outlines of the dancers light up through particles and show their beautiful movements while Ed plays the song.

Shivers – A colourful, fully ray-traced look based on glass and diamonds, with large, sweeping movements and high resolution.

Bloodstream – An iconic look for which we took Ed through a photogrammetry process to capture some of his emotions and facial expressions, which are so deeply rooted in the song, and to create highly accurate 3D assets. By heavily stylising and animating the 3D models, we generated a massive blood-red look, flowing with chemicals and mind-shattering emotions, while keeping Ed recognisable and connected to his audience.

On the importance of the team and recognizing your added value

V. S. – We do very specialised work, carried out with great expertise. Having these extraordinary customers means that you can’t fail… but you still want to try new and interesting things. In order to do that, you have to know the tools very well and the only way is to keep delving into them and working closely with the manufacturers, coders or whoever is behind that particular engine or product. So that’s what we do.

U. N. – We were also inspired by the good people that helped us in this.

V. S. – We have the best team and the best people on the planet! (laughs) …I really believe that! The reason customers come to us is that even when we send a single operator on a project, someone trained to solve problems and get answers on the spot, there is still a whole team of people behind them for support, making sure that whatever happens is dealt with. I’m personally very proud of what the team pulled off during the Epiroc Days Summit: we onboarded ourselves onto a new workflow and an engine we hadn’t used in depth before, at least not for a solution of that type. Urs was on the team on site and they did an outstanding job. The results were incredible; some people who saw the videos couldn’t tell what was real and what was AR! I don’t know what else I could possibly ask for… Getting to that point is really hard, and I think it shows how much effort we put into it!

U. N. – I think where we came from, professionally speaking, was really important to achieve these results. When we first started working together in 2018, we already had a lot of experience all over the place.

A look at the future of technology and possibilities

V. S. – I can’t tell you what is going to happen five years from now, but I can tell you what I personally want. I want lenses and I want Jarvis (a/n artificial intelligence created by Tony Stark in Marvel’s Iron Man). I want the heads-up display and either a neural network connected to my thoughts or something I can talk to.

U. N. – I’m not so sure I want that, actually! (laughs)

V. S. – It raises ethical problems, and it would be a very complex technology, but imagine what it could offer experientially and philosophically! I really believe in AR glasses as a solution. We’re not at that point yet, but I think we’ll see a lot of societal adoption of those types of solutions in the next five years.

U. N. – I just want better quality in rendering and images! I am thinking of Fortnite, the concerts, the metaverse, the live industry… The last two years have told us a lot about them. I don’t know where we’re going technologically, but I’m 100% sure that in five years’ time events and venues like that will have wonderful lighting, incredible stages… everything will look just perfect! If you look at what we’ve achieved in the last two years on the rendering engine front (just think of the crazy leap from Unreal Engine 4 to Unreal Engine 5) and then extrapolate that to the next five years… you can see that we’ll be able to experience entire movies in there and no one will notice the difference from real life. Also, MetaHumans, artificial humans, all these things are getting better and better, so I think we might as well learn how to walk through the uncanny valley and make it believable. We’re not there yet, but we will be…

V. S. – It’s like Moore’s law, ‘every two years the number of transistors on an integrated circuit doubles’… The speed of development in this industry is truly incredible! What Urs said about image quality, though, is very pertinent. I still struggle with suspension of disbelief in stand-alone headsets, and I think this aspect needs to improve in the coming years.

U. N. – Technology will improve… but will humans be on board? What does technology have to look like to be fully accepted by its potential users? The iPhone is an example of a technology that already existed but at some point became disruptive and is now used by everyone. But this does not always happen.

V. S. – I think it is a generational process and that the next generation will naturally be more connected. After all, it’s inevitable, it’s a natural progression… if there are no big problems along the way.

A lack of stories or a lack of storytellers: “that is the question”

V. S. – Is there really a problem of lack of stories? We’re still reading Shakespeare and I’m sure Shakespeare hasn’t been translated into VR that much, yet. What I wonder is whether there are enough people who can tell stories using immersive media. For me it’s not about how to contextualise stories within a specific medium, it’s about having enough people working in the field, understanding it and doing it well. It is true that stories can work better if they are told in one form or another, but what ‘better’ means I think is incredibly personal, and depends on who is watching, who is performing, who is listening.

U. N. – It is interesting to think about old stories told in new formats. At the moment we are learning how immersive technologies work in the same way that people in the last century learned about cinema and how to tell stories with it. How do you approach a story in VR? I think games work because they ask you to do something within them. Would a 360-degree film have the same effect? As far as I am concerned, interaction is a key element for a story to remain relevant to you for long enough.

Transposing real experiences into virtual worlds

V. S. – The power of a good book is that it plays a film in your head. All you need to feel scared, loved, happy or sad are words. What could be more magical than that? Everything we do with other media only tries to imitate that experience, in an attempt to transport someone inside a narrative that with a book is already inside you. What we have to ask ourselves is: how can we do that, how can we make our users care about the characters? How can we convince them to stay in that world?

To me, it’s important to be clear about what experiences we are creating and also to assess whether the technology is really adding to the narrative or rather confusing the rules, and whether it is properly supporting the user’s senses and playing with them.

I think this is where the metaverse presents some issues. Take Travis Scott’s performance in Fortnite: it’s a great event, but is it a performance or a game? From an experiential point of view it is very fascinating, but as a performance does it have a good design? The rainbow of colours you see is beautiful, but it has nothing to do with the stage, so for instance does it relate to the kind of work we did for the Ed Sheeran tour or is it something completely different?

This is something we have to consider with the metaverse: the kind of experience you want to build. You have to define the rules of the world you create, even from a physical point of view, because otherwise the experience itself will be meaningless. It will be fun, but will people really listen to the music? Will they be interested in the artist and their presence, or will they just play with that reality for five minutes, trying to figure out which buttons to press? That’s a topic in itself, and a very interesting one.

On the importance of shared events and the possibilities offered by AR

U. N. – For every show on Ed Sheeran’s tour, we rehearsed to see if things worked and what we needed to do. But the moment the real audience comes in, everything changes. It’s a bit magical. If the people in the arena are a bit tired because it’s Thursday and they work the next day, the artist will not perform as well as with a fresher audience. They can feel the people. So, how do you transport that into a virtual world?

V. S. – At the end of the day it’s funny that Evoke creates all these massive-scaled shared experiences in isolation. We create them without the biggest element that brings them to life: their shared nature.

This is actually the reason why I think AR will lead VR, at least for a while: when I’m wearing AR glasses I can see the world around me, but also the real people I care about who came with me and whom I am dancing with. It is an experience that I am sharing with them… but if I don’t really see these people and if I don’t feel the bass in my chest when the music is playing, am I even there? The experience is made by the speakers, the graphics, the lights, but also by those around you. I think this scale of experience should be the goal of the metaverse. There are millions of visitors, but it’s still people behind a computer, not in the same room… and those who work in live events understand why it’s not the same. At the end of the day, each of these two worlds, the live experience world and the virtual world, has qualities that are not found in the other, and until they are, both will exist and we will continue to experiment with them.

To find out more about what Evoke Studios is working on, visit their official website. As for Ed Sheeran, there’s still time to go and see him live. Visit the tour page to discover the upcoming dates!
